The role of data centers in society has changed. Public life, the economy, and society as a whole depend to a very large extent on the proper functioning of data centers, which can be seen as critical infrastructure that is also intertwined with other critical infrastructures. This creates societal and “moral” pressure, and an obligation, to demonstrate leadership in creating a sustainable society.

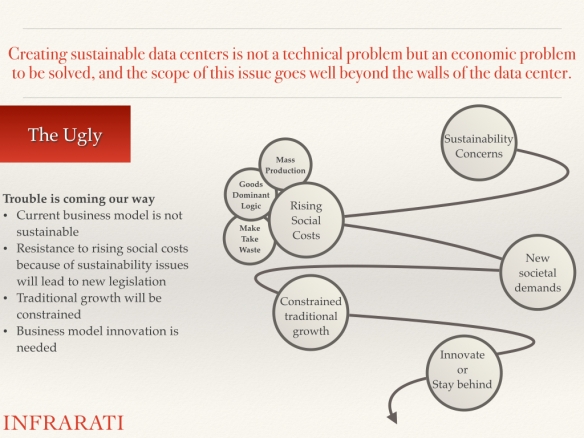

Creating a sustainable data center, one that is environmentally viable, economically equitable, and socially bearable, is a real endeavor. That is because the problem to be solved is not a technical one but an organizational and economic one. The scope of this issue goes well beyond the walls of the data center.

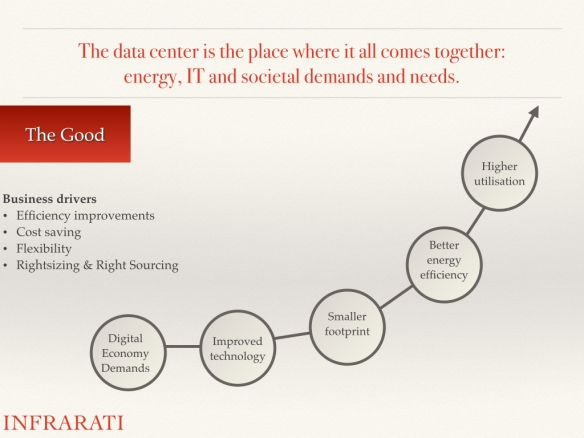

There are many opportunities to improve efficiency in the IT and data center supply chain. Doing more with less by removing inefficiency can help reduce the rate of resource depletion, emissions, and e-waste. Energy efficiency improvements downstream can lead to enormous improvements for the whole supply chain because of multiplier effects upstream: every unit of energy saved at the equipment also avoids the losses incurred in delivering it. Replacing carbon-based electricity with electricity from renewable sources, including hydropower, can bring CO2 emissions to zero. But that is not good enough.
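The multiplier effect can be illustrated with a small calculation. This is only a sketch: the stage efficiencies and the cooling overhead below are hypothetical round numbers chosen for illustration, not measurements, and the cooling model (a fixed overhead on top of the delivered power) is a deliberate simplification.

```python
def upstream_saving(downstream_saving_w, stage_efficiencies, cooling_overhead):
    """Estimate watts saved at the utility feed per watts saved at the IT load.

    stage_efficiencies: efficiency (0..1) of each power-delivery stage,
                        ordered from the IT load back toward the utility feed.
    cooling_overhead:   extra cooling power needed per watt delivered
                        (simplifying assumption: a fixed fraction).
    """
    power = downstream_saving_w
    for efficiency in stage_efficiencies:
        # Each stage must draw more than it delivers, so a saving grows
        # as it propagates upstream.
        power /= efficiency
    return power * (1.0 + cooling_overhead)


# Hypothetical values: server power supply, power distribution, UPS.
stages = [0.90, 0.95, 0.90]
saved = upstream_saving(1.0, stages, cooling_overhead=0.5)
print(f"1 W saved at the server avoids about {saved:.2f} W at the utility feed")
```

With these assumed numbers, one watt saved at the server avoids nearly two watts at the facility level, which is why downstream savings are worth pursuing first.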

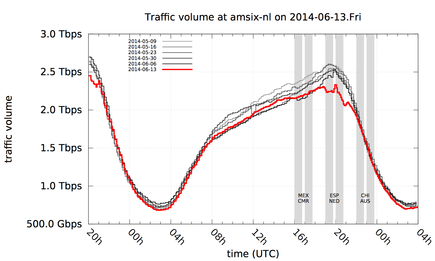

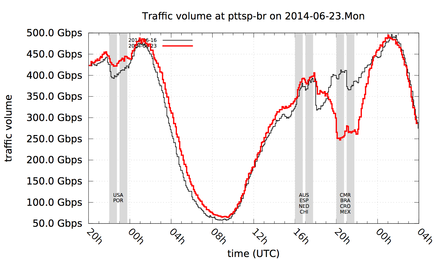

We have to deal with the “Jevons paradox,” where increases in the efficiency of using a resource tend to increase the usage of that resource. We also have to deal with the trends of “digitization of everything” and “anytime, anywhere, anyone connected.” These trends cause a staggering demand for digital services that will be delivered mostly by data centers.
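A toy calculation shows why efficiency alone does not settle the matter. The figures are invented purely for illustration: energy use per unit of service is assumed to halve while demand is assumed to triple.

```python
def total_energy(demand_units, energy_per_unit):
    """Total energy use is demand multiplied by energy per unit of service."""
    return demand_units * energy_per_unit


# Hypothetical scenario: efficiency doubles, but demand triples.
before = total_energy(demand_units=100, energy_per_unit=1.0)
after = total_energy(demand_units=300, energy_per_unit=0.5)

change = (after - before) / before
print(f"Total energy use changes by {change:+.0%} despite doubled efficiency")
```

Under these assumptions total energy use still rises, because the growth in demand outpaces the gain in efficiency.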

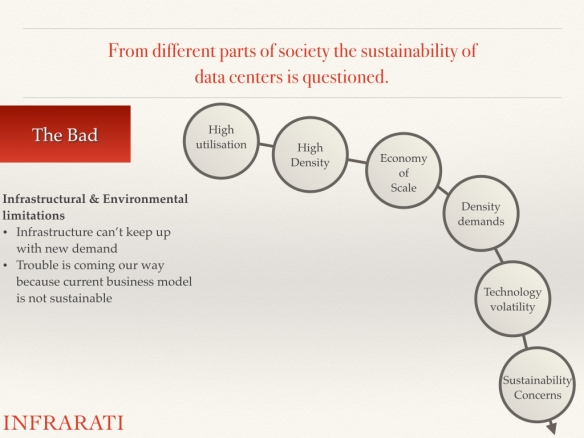

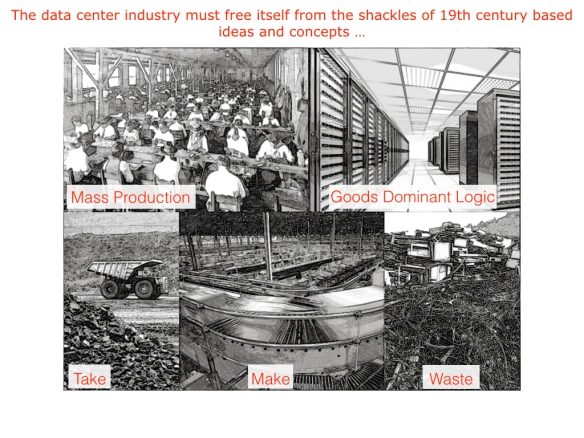

This demand and growth will be unsustainable if we continue to use the old industrial production system based on the nineteenth-century ideas and concepts of take, make, and waste.

If data center suppliers and IT organizations understand the necessity of sustainable production and want to meet the growing demand for digital services, then they have to change.

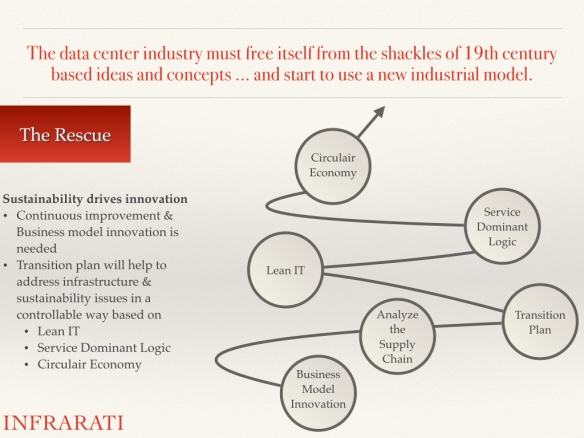

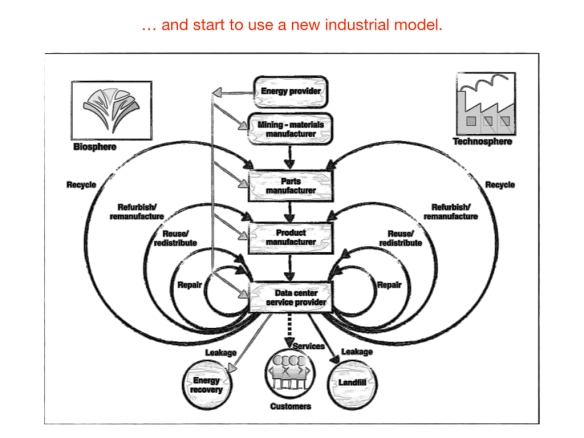

They have to change to a more sophisticated industrial production system by closing the loop: converting the linear production system to a circular system based on use, reuse, remanufacturing, and recycling. Focusing on performance and value in terms of customer-determined benefits will also create the need to make a transition from a goods-dominant logic to a service-dominant logic, where suppliers deliver services, not goods.

The cradle-to-cradle philosophy and service-dominant logic fit very well together: both are about selling results rather than equipment, and performance and satisfaction rather than products. To make this possible, one has to broaden one's scope beyond the data center. The supply chain should be tightly integrated, and it has to be co-designed and co-developed with suppliers and customers based on customer pull instead of supplier push.

Creating a sustainable data center calls for innovation. It, therefore, needs a multidisciplinary approach and different views and perspectives, within and between organizations, in order to close the loop and create a sustainable supply chain.

To create sustainable data centers, seven activities can be defined:

- Moving toward zero waste: at first the focus should be on internal efficiency; later on, the customer must be involved to reduce underutilization and overprovisioning, and life cycle analysis must be implemented.

- Increasingly diminishing emissions along the supply chain: identify and evaluate externalities and social costs, and act on them by creating sustainable procurement policies.

- Increasing efficiency and using more and more renewable energy: introduction of energy management, renewable energy deals with power suppliers, use of local renewable energy, and introduction of the emergy concept.

- Closed-loop recycling: take-back procurement policy, introduction of reverse logistics, and “design for the environment.”

- Resource-efficient placement and transportation: reevaluation of the data center centralization and economy of scale concept versus the economy of repetition and distributed data center concept.

- Creating commitment: involvement of stakeholders in the transformation to a new industrial production system.

- Redesigning commerce: conversion to service-dominant logic and supply chain integration downstream and upstream by co-design and co-production.

Is this endeavor impossible? I don’t think so. It is more a question of commitment. Rethink the “data center equation” of “people, planet, profit” and prepare yourself and your organization to climb Mount Sustainability.

For more information, read “Data center 2.0: The sustainable data center”:

http://www.amazon.com/Data-Center-2-0-The-Sustainable/dp/1499224680